Final

Report

May 12, 2017

We analysed solutions for parallel memory allocation and implemented a lock free memory allocator.

We used the GHC machines at CMU to generate results for our analysis.

Background

Summary

For the analysis of existing parallel dynamic memory allocator we used the following benchmark tests (courtesy Hoard) -

-

Larson

-

One Thread allocates and frees random sized blocks [8-4096 bytes] in random order, then an equal number of blocks is handed over to each of the remaining threads

-

Intended to simulate server workloads

-

Captures robustness of malloc’s latency and scalability under irregular allocation patterns

-

-

Thread Test

-

Each thread performs 1000 iterations of allocating 10,000 blocks [8-4096 bytes] and freeing them in order.

-

Capture allocator latency and scalability under regular private allocation patterns

-

-

Linux Scalability

-

Each thread performs 10 million malloc/free operations on blocks ranging from size 8 - 4096 bytes in a tight loop

-

This also tests the latency and scalability of the allocators.

-

We ran these benchmark tests for all the above mentioned memory allocators. We varied the following parameters to perform our analysis :

-

Number of threads (1 - 16)

-

Allocation size (1 - 4096 bytes)

-

Number of iterations to perform for each of the benchmark

APPROACH

Analysis

Dynamic memory allocation in a parallel work environment relies on mutual exclusion locks in order to provide and protect consistency across shared data structures. Nevertheless, using locks and mutual exclusion techniques reduces performance and impacts program flexibility. A lock-free memory allocator guarantees progress regardless of whether some threads are delayed or even killed and regardless of scheduling policies. The dynamic memory allocators that we analysed are -

-

Jemalloc : Jemalloc is general purpose memory allocator that uses multiple arenas which manage memory completely independent of each other to reduce lock contention. It provides thread specific caching and uses memory chunks with small, large and huge object sizes. Each of the object types have specific size classes. Multiple small and large objects can lie within a single chunk whereas huge objects each have one or more chunks backing them. The allocation is tightly packed together which can lead to false sharing. Jemalloc works well when the workload is contention heavy. The disadvantage of jemalloc is its memory usage as it uses power-of-two sized bins which leads to a greatly increased memory footprint compared to other allocators.

-

Tcmalloc : Tcmalloc is a low latency memory allocator. It assigns a local thread cache to each thread. It is space efficient in terms of representation of small blocks (only 1% space overhead). There is almost zero contention for small blocks as each thread allocates memory from its respective local cache. Large objects are allocated directly from a centrally maintained heap using a page-level allocator which employees fine grained and efficient spin locks. Tcmalloc maintains 170 allocatable size classes which are spaced such that small sizes are separated by 8 bytes and larger sizes are spaced by 16 bytes, even larger sizes are spaced by 32 bytes and so forth. The maximal spacing that tcmalloc employees is 256 bytes.

-

Hoard : Hoard allocator aims at avoiding false sharing. Each thread maintains its own heap. A global heap exists which can be accessed by all threads. It maintains usage statistics for each heap, which are the amount of memory in use in a heap and the amount of memory allocated from the OS to that heap. Memory is allocated in chunks called superblocks. Each superblock contains some number of blocks and a free list of available blocks is maintained in LIFO order. Large memory blocks are directly obtained from the OS and freed to the OS using mmap and munmap. Internal fragmentation is reduced by having each superblock of a particular size-class. Empty blocks are recycled by moving them from thread heaps to global heap. They are never returned to the OS. Concurrency is maintained by applying locks on these superblocks.

-

Ptmalloc : The allocator uses mutually exclusive "arenas". A thread will try to lock an arena to do the work it needs. If another thread is using that arena, then the first thread either waits, or creates a new arena. This contention between threads reduces performance and increases fragmentation in multithreaded applications. Memory cannot be moved from one arena to another. 4 Byte header for each object is maintained.

Our lock - free implementation reduces false sharing by leveraging some high-level structures from Hoard. A deadlock free system is implemented as lock-free objects are immune to deadlocks and livelocks. Our system is also tolerant to critical region threads being killed or crashing. The lock- free malloc is implemented using simple atomic instructions (Compare and Swap in particular).

Lock Free Dynamic Memory Allocator

We implemented a lock free dynamic allocator with a design and structure similar to the Hoard memory allocator. We are referencing the Scalable Lock Free Dynamic Allocator paper from IBM.

Our dynamic allocator virtually divides the memory into multiple size classes for small blocks. Large blocks are allocated and returned directly from/to the Operating System. Each size class comprises of N processor heaps (N is the number of processors on the machine). All threads request memory from the processor heap that corresponds to the processor on which the thread is executing from the respective size classes.

Each processor heap further contains superblocks, superblocks are a collection of blocks of size size-class. At any point in time, there will be only one “active” superblock in the processor heap. All allocations for the respective size-class for threads running on one processor will be served by the same superblock until all blocks in the superblock have been allocated. This reduces false sharing since threads running on the same processor can benefit from spatial locality.

Each superblock is associated with a descriptor (a structure) that holds the details of the superblock.

A superblock can be in one of the following states, ACTIVE, FULL, PARTIAL and EMPTY. An ACTOVE superblock is the one which has blocks available for allocation. A FULL superblock represents a superblock which has all its blocks allocated. A PARTIAL superblock is one which has blocks available for allocation but is not an ACTIVE superblock. A superblock could be in PARTIAL state in the case when a thread relieves a block from a FULL superblock. An EMPTY superblock is one which has no blocks reserved and is not the ACTIVE superblock. An EMPTY superblock is returned to the OS when it is identified to be empty.

Our implementation treats superblocks in a PARTIAL state a special manner. We maintain a list of partial blocks for each size-class. A thread running on any processor can be allocated memory from any partial superblock irrespective of the processor it is running on. This prevents us from achieving complete false sharing avoidance. It is reasonable to go with this approach as the partial blocks are contributing to internal fragmentation, and the number of blocks available in the partial superblocks will be a small number.

A typical malloc call will follow the following steps:

-

If it is the first call to malloc will initialize the static data structures maintaining details of the superblocks, processor heaps and size classes.

-

The allocator will check for an ACTIVE superblock in the respective processor heap.

-

If an ACTIVE superblock exists, the allocator allocates a block from that superblock and updates all the structures in a lock free manner (using compare and swap).

-

If no ACTIVE block is present, the allocator looks through the list of PARTIAL superblocks and allocates the available blocks. While allocating blocks from a PARTIAL superblock, the allocator allocates as many available blocks available in the PARTIAL superblock and removes it from the list of PARTIAL superblocks. This transaction is performed in a lock free manner.

-

If no block is found in any of the above two steps, the allocator allocates a new superblock and marks it as ACTIVE.

When freeing a block, we return the block to the same superblock it was allocated from. Thus, we store the address of the descriptor of the superblock the block belongs to in the first eight bytes of the allocated block.

To update the descriptor at the time of a malloc or a free in a lock free manner we use a “tag” to avoid the ABA problem. We update the tag while we perform the compare and swap and keep track of the value of tag to identify if there is an ABA issue. If found, we redo the update process with new data.

One of the big challenges we are facing is with maintaining the list of partial superblocks. As mentioned above, we maintain a linked list of partial superblocks. We add the new partial superblocks to the list at the tail of the list and start removing partial superblocks from the head of the list.

In order to add a superblock to the tail of the list, we need to maintain the tail of the list. Thus, when adding the superblock, we need to update two pointers (tail of the list and the tail->next) in an atomic fashion. Since we are using atomic compare and swap (compare and swap only supports one element to be updated at a time) it is hard to maintain consistency of the list. Thus, we are implementing a lock using atomic compare and swap for this list. It is hard to obtain completely lock free implementation due to this.

An alternative to this would be to not maintain a list of partial superblocks and allocate new superblocks each time there is no active superblock. This approach would induce high fragmentation into the memory and will also increase the memory footprint of the allocator.

Results

We ran the analysis on GHC machines having the following specifications:

CPU : Xeon E5-1660, 8 Cores (2x Hyperthreaded), 3.2GHz, 20MB L3 Cache, 32 GB RAM

Analysis

Following are the results of running the above mentioned benchmarks on the various dynamic memory allocators -

LARSON

Parameters: (from the command line)

<seconds> <min-obj-size> <max-obj-size> <objects> <iterations> <rng seed> <num-threads>

Test Arguments: ./larson 10 8 <Max Object Size> 1000 10000 1 <Num. of threads>

-

Ptmalloc performs poorly due to constant switching of threads between arenas and the high contention for each arena and due to the fact that threads try to free blocks to arenas which are locked by other threads.

-

Hoard shows throughput drop for large object size (greater than 256) allocation due to high contention on the heap as most of the operations are targeted at the same heap. Hoard also frees blocks to remote heaps causing high contention.

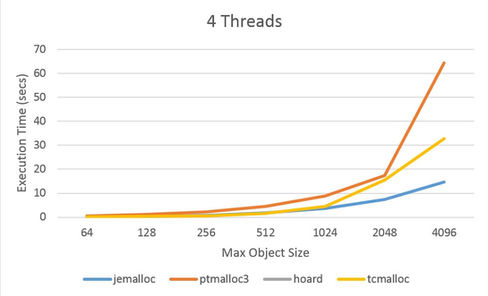

THREADTEST

Parameters: <number-of-threads> <iterations> <num-objects> <work-interval> <object-size>

Test Arguments: ./threadtest <Num. of Threads> 1000 10000 0 <Max Object Size>

-

Ptmalloc scales poorly under high contention, as it becomes more likely that threads take over the arenas of other threads when they have no free blocks in their own heap which also increasing false sharing

-

Latency increases as the block size increases for Tcmalloc as the blocks do not fit in the thread’s local cache anymore.

LINUX-Scalability

Parameters: <object-size> <iterations> <number-of-threads>

Test Arguments: ./linux-scalability <Max Object Size> 10000000 <Num. of threads>

-

On increasing number of iterations TCMalloc and Hoard gives errors as it doesn’t return memory to the OS.

-

Ptmalloc performs poorly due to lock contention

-

Jemalloc, hoard and tcmalloc scale well for this test due to their contention free designs.

-

Tcmalloc performs well for small object sizes as there is zero contention – each thread accesses its own thread cache.

Lock Free Dynamic Memory Allocator

We have implemented the lock free memory allocator which can be located at https://github.com/adityam1/malloc. We were not able to run the above identified benchmarks against the implemented lock free memory allocator since our implementation was running into some errors. The benchmark tests run heavy malloc jobs and unfortunately our implemented allocator crashes while running the benchmarks.

List of work by each students

Equal work was performed by both project members.

ReFERENCES

Hoard: A Scalable Memory Allocator for Multithreaded Applications - Emery D. Berger, Kathryn S. McKinley, Robert D. Blumofe, Paul R. Wilson

Scalable Lock-Free Dynamic Memory Allocation - Maged M. Michael

Jemalloc - https://github.com/jemalloc/jemalloc

TCMalloc : Thread-Caching Malloc - Sanjay Ghemawat, Paul Menage